Hi! I am Kaizhen Tan (Chinese name: 谭楷蓁). I am currently a master’s student in Artificial Intelligence at Carnegie Mellon University. I received my bachelor’s degree in Information Systems from Tongji University, where I built a solid foundation in programming, data analysis, and machine learning, complemented by interdisciplinary training in business and organizational systems.

My research sits at the intersection of Urban Science and Human-centered AI. Driven by the vision of harmonizing artificial intelligence with urban ecosystems, I aim to build spatially intelligent and socially aware systems that make cities more adaptive, inclusive, and human-centric.

To realize this vision, my work integrates:

- Paradigms: Robot-Friendly City, Lightweight Urbanization, Self-evolving Urban Digital Twins

- Methodologies: Social Sensing, Geospatial & Spatiotemporal Data Analysis, Computational Social Science, Human-Computer Interaction

- Technologies: LLMs, VLMs, AI Agents, Embodied AI, Spatial Intelligence, World Models, Emerging Devices

Specifically, my research agenda explores four key topics:

🤖 1. Robotic Urbanism & Collaborative Governance

Core Question: How should embodied intelligence move through cities, and how can humans govern and collaborate with it at scale?

- Robot-Friendly Urban Space: Redesign streets and building interiors for robot operations, setting standards for siting, infrastructure, protocols, and responsibility boundaries.

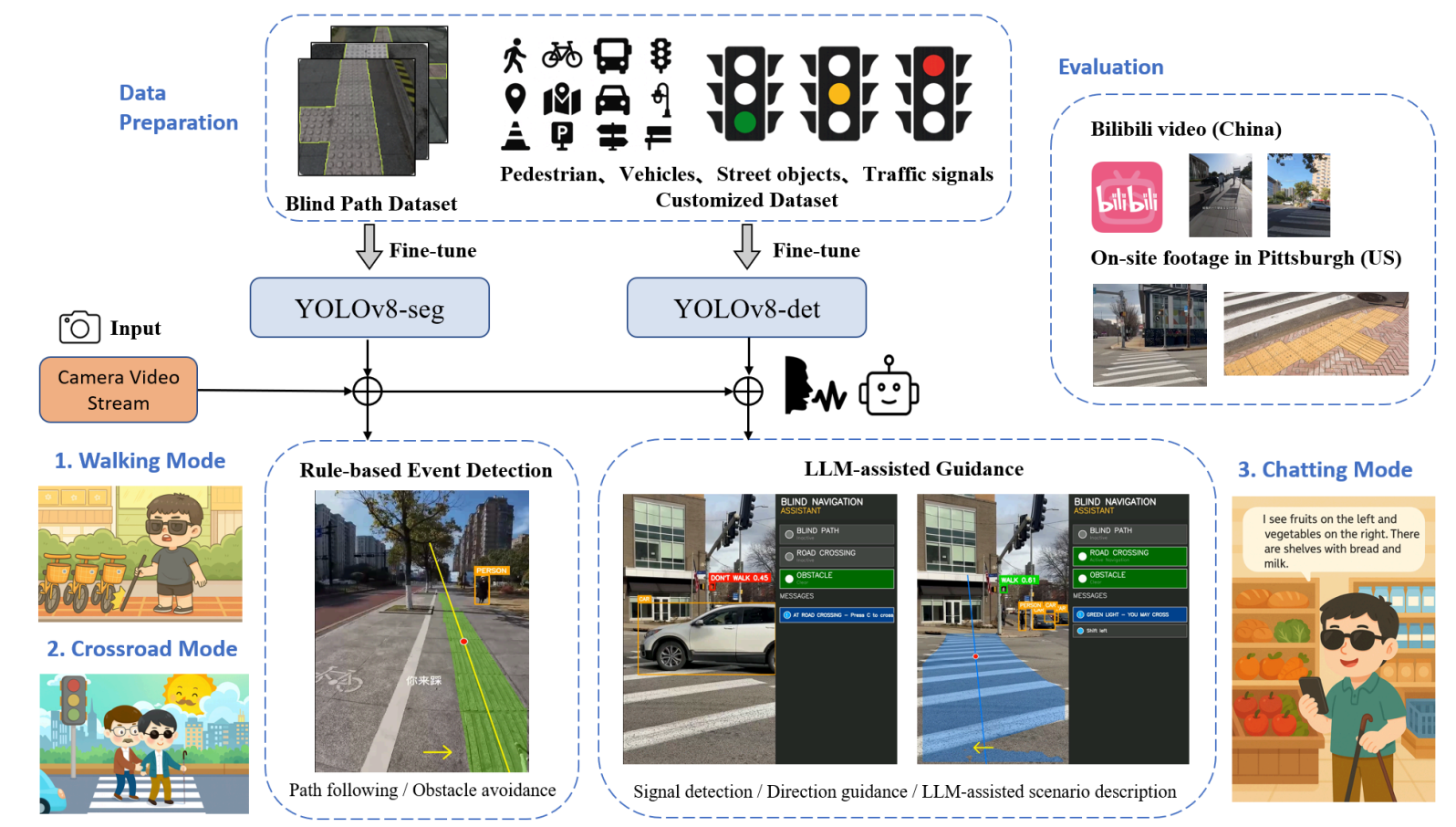

- Embodied Operation: Vision-based navigation and task execution under real urban constraints, with accessibility-aware routing and compliance with local infrastructure and operational rules.

- Low-Altitude Governance: Derive operable air corridors from demand signals, then validate them in 3D city models against privacy, noise, crowd, and other impacts.

- Emerging Urban Devices & Deployment: Study city-scale rollout of robots, low-altitude systems, wearables, and BCI-like devices, focusing on deployment bottlenecks.

- Public Acceptance & Ethics: Model how different groups perceive risks and capabilities, guiding interaction design, rollout strategy, and public communication.

🎨 2. Social Sensing & Human-Environment Interaction

Core Question: How can multimodal human-centered data translate into actionable insights for urban planning and governance?

- AI-Enhanced Geospatial Analysis: Use large-scale spatial analytics to link urban form, environment, and mobility with human behavior and public service outcomes.

- Accessibility & Pedestrian Experience: Analyze walking experiences and accessibility barriers, integrating disabled people’s mobility needs into urban governance decisions.

- Urban Perception & Visual Aesthetics: Quantify streetscape perception and neighborhood imagery to inform design choices and regeneration priorities.

- Socio-Cultural Signals for Governance: Incorporate place-based narratives into LLM-enabled applications for communication, interaction, and inclusive governance.

🏙️ 3. Self-Evolving Urban Digital Twins and Agents

Core Question: How can we build a self-evolving urban digital twin that stays continuously updated, hosts agents, and supports sustainable and equitable city governance?

- Urban Foundation Model: Integrate remote sensing, street-level imagery, mobility trajectories, POIs, IoT signals, and text into unified urban representations.

- Measurement & Sensing Pipelines: Develop scalable metrics and updating pipelines, using robots, drones, and wearables as new data sources for continuous urban sensing.

- Localization, Mapping, and 3D City: Geo-localization and semantic mapping across point clouds, meshes, and 3D Gaussian representations for interactive querying and simulation.

- Urban Agents: Build task agents for planning and public services, including map-LLM, spatial RAG, policy QA, and travel assistance.

- Policy Sandbox: Use the twin for what-if simulation, risk assessment, and execution checks, supported by efficient retrieval, caching, and rendering.

🚀 4. Spatial Intelligence & Foundation World Models

Core Question: How can world models support reliable spatial reasoning and decision-making for physical agents?

- World Models & Architectures: Study generative, predictive, and representation-learning paradigms for forecasting and planning in the physical world.

- Embodied Representations: Unify geometry, semantics, physics, and action into shared representations, with a path toward richer embodied modalities.

- Long-term Memory & Self-Evolution: Build lifelong learning mechanisms with stability, forgetting control, and safety constraints for long-horizon autonomy.

- Interpretable Spatial Reasoning: Improve interpretability and robustness of spatial reasoning, exploring 3D-aware encoders and alternatives to standard transformers.

📫 Let's Connect!

- Please feel free to reach out if any of these research directions resonate with you. I’d be happy to chat!

🔥 News

- 2026.01: 🎉 The abstract co-authored with Prof. Fan Zhang has been accepted for the XXV ISPRS Congress 2026. See you in Toronto!

- 2025.12: 🎉 Our paper, led by my senior labmate Dr. Weihua Huan and co-authored with Prof. Wei Huang at Tongji University, was accepted by GIScience & Remote Sensing. I am honored to have contributed as second author, and big congratulations to Dr. Huan!

- 2025.10: 🔭 Joined Prof. Yu Liu and Prof. Fan Zhang’s team at Peking University as a remote research assistant.

- 2025.08: 🎉 Delivered an oral presentation at Hong Kong Polytechnic University after our paper was accepted to the Global Smart Cities Summit cum The 4th International Conference on Urban Informatics (GSCS & ICUI 2025).

- 2025.07: 🎉 My undergraduate thesis was accepted by the 7th Asia Conference on Machine Learning and Computing (ACMLC 2025).

- 2025.06: 🎓 Graduated from Tongji University. Grateful for the journey and excited to continue my studies at CMU.

- 2025.04: 🔭 Completed the SITP project under the supervision of Prof. Yujia Zhai in the College of Architecture and Urban Planning.

- 2025.01: 💼 Joined Shanghai Artificial Intelligence Laboratory as an AI Product Manager Intern.

- 2024.09: 🌏 Conducted research at A*STAR in Singapore under the supervision of Dr. Yicheng Zhang and Dr. Sheng Zhang.

- 2024.04: 🔭 Began my academic journey at Prof. Wei Huang’s lab in the College of Surveying and Geo-Informatics, Tongji University.

📖 Education

💼 Experience

🔭 Research

- 2025.10 - 2026.04, Research Assistant, Institute of Remote Sensing and Geographic Information System, Peking University, China

- 2024.09 - 2024.12, Research Officer, A*STAR Institute for Infocomm Research, Singapore

- 2024.04 - 2025.04, Research Assistant, College of Architecture and Urban Planning, Tongji University, China

- 2024.04 - 2024.12, Research Assistant, College of Surveying and Geo-Informatics, Tongji University, China

💻 Industry

- 2025.01 - 2025.04, AI Product Manager, Shanghai Artificial Intelligence Laboratory, China

- 2023.01 - 2023.02, Data Analyst, Shanghai Qiantan Emerging Industry Research Institute, China

📝 Publications

🔬 Projects

💬 Presentations

-

2026.07 - XXV ISPRS Congress 2026

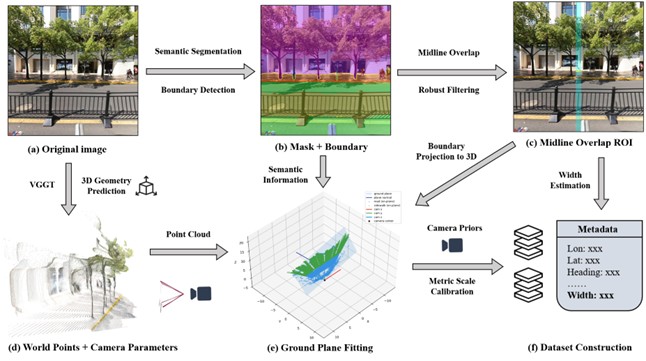

UrbanVGGT: Scalable Sidewalk Width Estimation from Street View Images

Toronto, Canada -

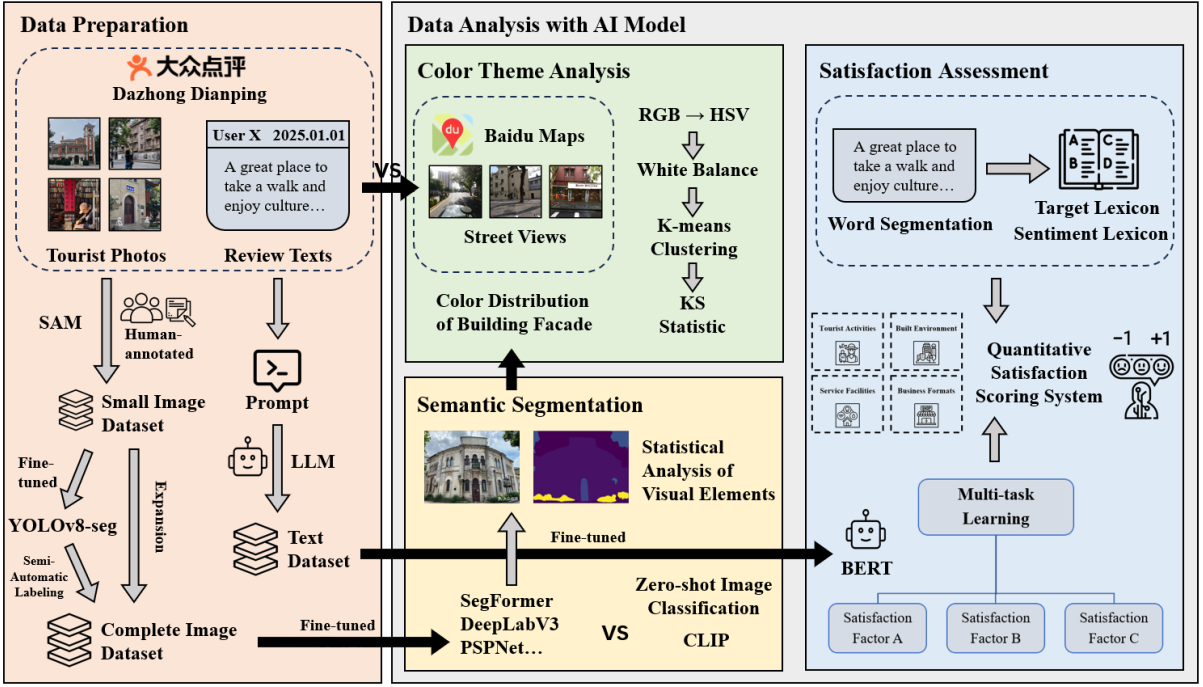

2025.08 - Global Smart Cities Summit cum The 4th International Conference on Urban Informatics (GSCS & ICUI 2025)

A Multidimensional AI-powered Framework for Analyzing Tourist Perception in Historic Urban Quarters: A Case Study in Shanghai

Hong Kong Polytechnic University (PolyU), Hong Kong SAR, China -

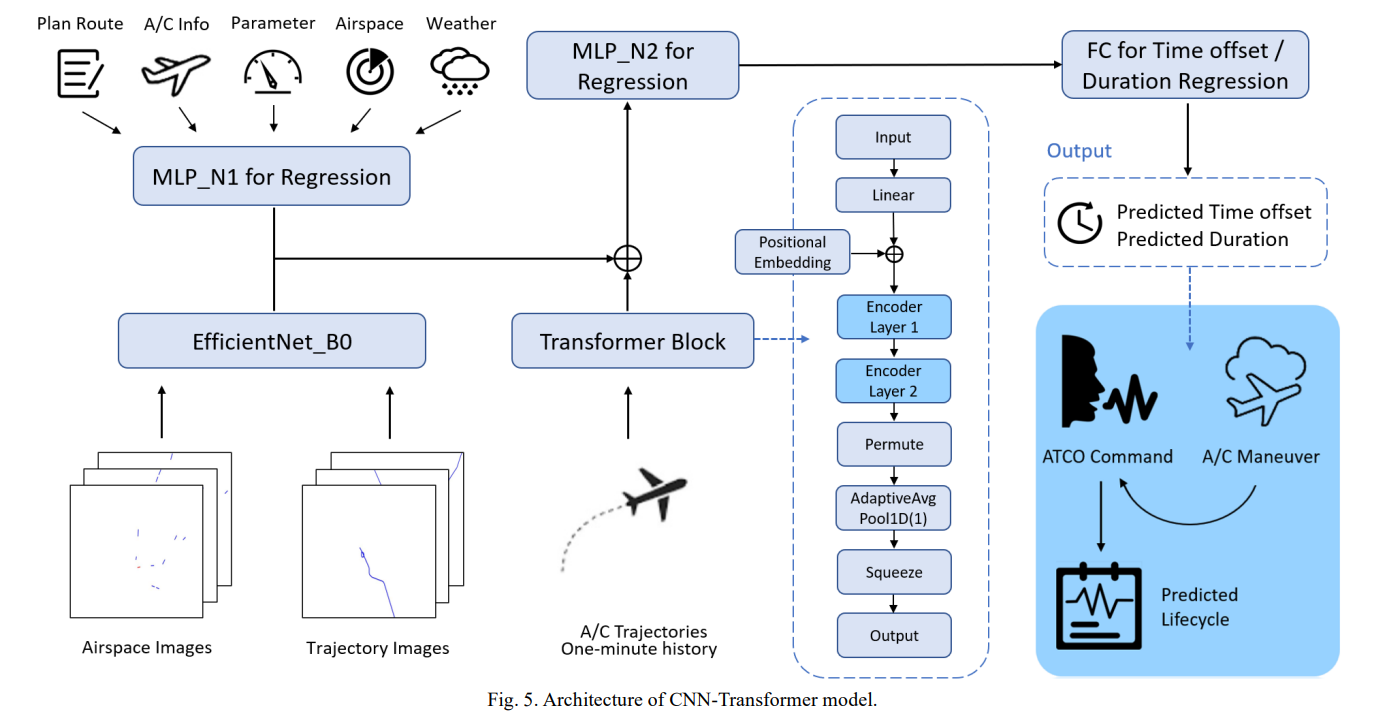

2025.07 - 7th Asia Conference on Machine Learning and Computing (ACMLC 2025)

Multimodal Deep Learning for Modeling Air Traffic Controllers Command Lifecycle and Workload Prediction in Terminal Airspace

Hong Kong SAR, China

📫 Contact

- Email (CMU): kaizhent@cmu.edu

- Email (personal): wflps20140311@gmail.com